One of the most important artifacts in Service-oriented Architecture (SOA) is the service contract. The contract defines how service producers and consumers interact with each other. Most people agree that a contract first approach is the preferred way of creating service contracts. WSDL based service contracts are very common. In many projects they are generated by the respective frameworks, such as Apache CXF or Microsoft WCF. This is dangerous, as the developer looses control over the contract. This can result in missing interoperability and limited reusabilty expecially in cross platform scenarios.

From what I see, most developers know how to work with their favourite IDE, but only a few know how to create a proper service contract from scratch. I think that is caused by the tool vendors, which in most cases have better support for code based approaches.

In this post I would like to show how to create a portable contract from scratch and share some best practices.

The domain model

The first thing to think about is the domain or entity model that the service should expose. The model should be modeled in XML Schema. XML Schema is standard, portable and supported on all platforms. In fact it is a good idea to use XML Schema as the single source of model information.

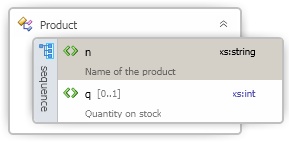

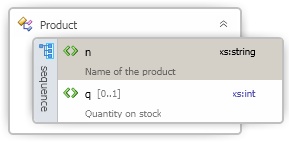

The following listing defines the entity Product:

Name of the product

Quantity on stock

The entity Product has two attributes name and quantity. Please note that the xml elements (line 10 and 15) are abbreviated. This helps to reduce the message size on the wire, expecially important in WANs. The downside is obviously reduced readability. That is not really a problem as the contract should be documented anyway using the standard XML Schema annotation elements as shown in line 11 to 13.

This is the result:

Wouldn’t it be nice to have a common base class in order to create a more generic service contract?

Luckily XML Schema allows that. Let’s create another schema BaseTypes.xsd in which we define the base types.

Unique key

Status of the entity

This base type Entity (line 24) defines common attributes for all entities. In XML Schema one can define enumerations as well (line 8 and 15). The enumeration EntityStatus defines the state of the entity. This is important in scenarios in which the entities are detached from the database. By marking the entities, it is easy to implement change tracking on the consumer side and just return the changed or deleted parts to the server. This saves network and processing time. EntityType is used later in our service interface to request different types of entities.

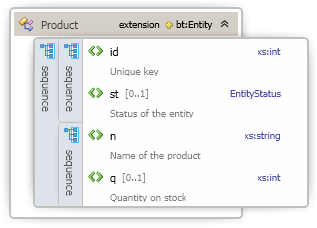

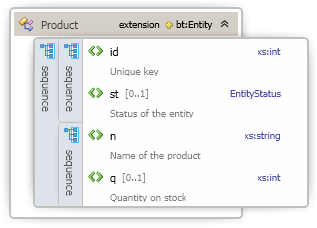

We can now import the base types into Types.xsd and change the Product type:

Name of the product

Quantity on stock

You can see the import in line 9. In line 12 and 13 the base type is added to the Product type.

This is the result:

Now we have our model completely defined in XML Schema. By using imports and type inheritance one can create very modular entity models. Those models can be reused without any change by interface definitions such as WSDL and WADL for SOAP or REST services. Moreover the code models (Java, C#, etc.) can be fully generated from the schema. Every good framework has a generator tool on board. For instance Apache Axis/wsdl2java, Apache CXF/wsd2java, Silverlight/slsvcutil, .NET/svcutil, etc. The schema can be stored in a service repository in order to increase reusability amongst the organisation.

The messages

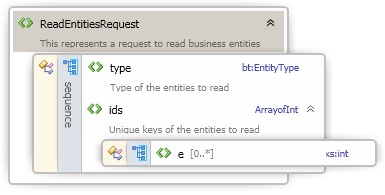

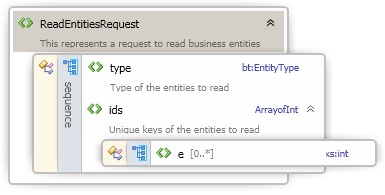

Every service operation receives a message and returns a message to the sender. Those messages should also be defined using XML Schema. The following snippet shows the message definitions. The fault messages are ommitted for the sake of simplification.

This represents a request to read business entities

Type of the entities to read

Unique keys of the entities to read

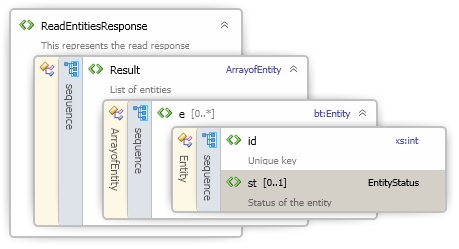

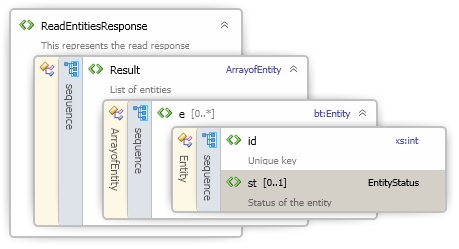

This represents the read response

List of entities

Those are our messages:

ReadEntitiesRequest and ReadEntitiesResponse are bulk operations that allow to read multiple entities with one call. As you can see ReadEntitiesResponse does not return Products but Entities. Do you remember? This is our base class type for all entities. By using the base class, the message can return arbitrary entity types in the same message. This is an example for a strongly typed but yet extensible interface.

The schemas can be reused in WSDL files:

The type information and message definitions are completely externalized to individual schemas. As a result the WSDL is small. The imported types (line 4) are used to define the WSDL messages (line 7 and 10). A WADL file would look like this:

This is the product id

The types are imported (line 8 and 9) and used to define the return message (25).

By using the respective tool, such as wsdl2java or svcutil, we can completely generate the model and messages for the client and server on JEE, .NET and other platforms.

Summary

As you can see it is not too difficult to create schema based service contracts in a platform agnostic way. If you do, you will be rewarded with a great level of control, portability and reusability. Powerful features of XML Schema, such as inheritance, allow to create robust and extensible service contracts, which are the basis for interoperability. And if you want, you can even use an XML editor. 😉